Another new project slowly ticking away in the background.

FEED is a stage-play adaptation of a novel by M.T. Anderson, set in a disturbingly not-too-distant future where everyone has the internet in their heads. Everybody has the knowledge of the world at their fingertips and, as the world slides into oblivion, nobody is interested in anything beyond accessorising (sound familiar?).

I’m pretty excited about the idea at the moment. I think you could make this as a Hollywood blockbuster with shed-loads of special effects and the jaded audience wouldn’t even blink (though the story is probably better than a lot of the films I’ve seen in recent times). No, the film buffs amongst them would make comparisons to something like Tron and off they’d all trot to get a burger. But I think that trying to do something like this live on stage will make the audience sit up in their seats and take notice (and hopefully engage a little more with the content of the story).

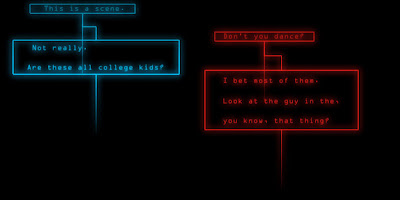

The two biggest challenges in all of this for me are firstly to try and visualise what it might be like for everyone to have the internet in their heads, and secondly how to pull this off in a theatre setting. At the moment I imagine the Feed to be this swirling vortex of data (text, images, video, etc.) and then within that a little sub-vortex orbiting the head of each of the characters. The items circling each of the characters are specific to them and will indicate what they’re thinking about. The way they pass images and data to one another will be a big part of the whole thing too. And then there’s “texting” as well. Maybe a third of the script is done with text messages, so there needs to be some kind of representation of that as well.
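To make the sub-vortex idea a bit more concrete, here is a minimal Python sketch of items orbiting a point. It is only an illustration and not how the actual patch is built: the function name `orbit_positions` and the parameters `radius` and `period` are made up for this example, and the centre would be whatever position you have for a character's head.

```python
import math


def orbit_positions(centre, n_items, radius, t, period=8.0):
    """Return (x, y) positions for n_items spaced evenly on a circle
    around `centre`, rotated according to the time t in seconds.

    `period` is the (assumed) number of seconds for one full revolution.
    """
    cx, cy = centre
    phase = 2 * math.pi * (t / period)  # how far the ring has turned so far
    positions = []
    for i in range(n_items):
        angle = phase + 2 * math.pi * i / n_items
        positions.append((cx + radius * math.cos(angle),
                          cy + radius * math.sin(angle)))
    return positions


if __name__ == "__main__":
    # Four items circling a head at (0.5, 0.5), two seconds into the orbit.
    for x, y in orbit_positions((0.5, 0.5), 4, 0.1, t=2.0):
        print(f"{x:.3f}, {y:.3f}")
```

Calling this once per frame with the current time and the latest head position would keep each character's items circling them as they move.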

To try and do this it’s going to be necessary to track the performers as they move about on stage and then use that info to drive the visuals, so that the performers can just act and not have to worry about being in the right place at exactly the right time. I’ve managed to do this so far using a Kinect and TUIO, talking to Quartz Composer, building on some of the work I did (but ultimately abandoned) for Highly Strung. I may stick with this, or I may find something better. At the moment I like the way the Kinect works on infra-red light so it doesn’t get confused by projections or the lighting on the actors (or complete darkness for that matter). I’ve got zero interest in Xbox games but, for $150, the Kinect is a great bit of kit.
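For anyone curious what the tracking data looks like, TUIO sends positions as OSC messages. Below is a rough Python sketch (not the Quartz Composer patch itself) that reads the arguments of a TUIO 1.x `/tuio/2Dcur` "set" message and maps the normalised position onto projector pixels. The names `Cursor`, `parse_2dcur_set` and `to_pixels` are invented for this example, the message is assumed to have already been decoded into a plain list by some OSC library, and the top-left origin is an assumption about how the tracker is set up.

```python
from dataclasses import dataclass


@dataclass
class Cursor:
    session_id: int
    x: float  # normalised 0..1, assumed left to right
    y: float  # normalised 0..1, assumed top to bottom


def parse_2dcur_set(args):
    """Parse the argument list of a `/tuio/2Dcur` "set" message.

    Expected layout: "set", session_id, x, y, x_velocity, y_velocity, accel.
    Returns None for anything else (for example "alive" or "fseq").
    """
    if len(args) < 4 or args[0] != "set":
        return None
    return Cursor(int(args[1]), float(args[2]), float(args[3]))


def to_pixels(cursor, width, height):
    """Map a normalised cursor position to pixel coordinates, clamped to the frame."""
    x = min(max(cursor.x, 0.0), 1.0)
    y = min(max(cursor.y, 0.0), 1.0)
    return (round(x * (width - 1)), round(y * (height - 1)))


if __name__ == "__main__":
    message = ["set", 7, 1.0, 0.25, 0.0, 0.0, 0.0]  # made-up sample values
    cursor = parse_2dcur_set(message)
    print(cursor, to_pixels(cursor, 1024, 768))
```

The pixel position is what you would feed to the visuals each frame, so the graphics follow the performer rather than the other way around.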

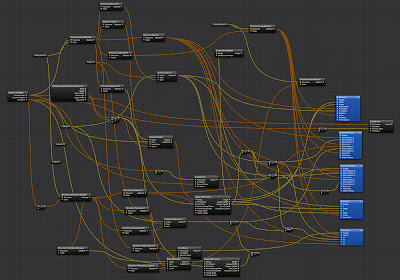

And the code to do that looks like this.

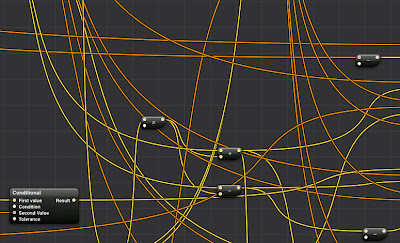

And again… in more detail… just so you can get a better idea of what’s going on.

This has been my first foray into Quartz Composer and what they call spaghetti code. Fortunately there are a lot of great resources out there on the internet (kineme.net in particular; I’ve learned loads from deconstructing/butchering some of the things on that site). Basically though, it’s lots of little “bits” that do “stuff” and you link them up in a big ugly tangle…

…And it makes something that looks a bit like this.

And then you can project all that onto a scrim in front of the performers.

I managed to get a bit of time in with Greg and Anna recently in between them stressing out over their performance of Oliver’s Tale on the salt lake. The shot above is of Anna doing a much better job than me of looking like a youth as we test out the texting thing.

For the Feed itself, the plan is to run the show with a live connection, doing live image searches on the internet based on things that are relevant to the context of the show, then have the results thrown into the mix with everything else. Below are the results of a couple of Google image searches on a few different strings.

Search strings: “Disco”, “Ecological Disaster”, and “Boobs, Kissing & Ulcers” (quite a heady mix).

It does quite a nice transition from one set to the other too (if I do say so myself), taking about 10 seconds to change from one to the next as the whole thing continues swirling around. And what is great about this is that it opens up the possibility of having the audience text in stuff in real time as well, and have that influence the feed too. The idea of having the visuals be this omnipresent thing on stage that connects everyone together, actors and onlookers, could end up making for a pretty powerful experience, I think.
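One way to think about a transition like that is as a cross-fade: over the 10 seconds, the old set of images fades out while the new set fades in. The Python sketch below is just one possible way of doing it, not a description of the Quartz Composer patch. The smoothstep easing is my own choice for the example, and the names `smoothstep` and `crossfade_weights` are hypothetical.

```python
def smoothstep(u):
    """Ease a value in the range 0..1 so the fade starts and ends gently."""
    u = min(max(u, 0.0), 1.0)
    return u * u * (3.0 - 2.0 * u)


def crossfade_weights(elapsed, duration=10.0):
    """Return (old_weight, new_weight) for a fade that takes `duration` seconds.

    `elapsed` is the time in seconds since the new search results arrived.
    """
    new_weight = smoothstep(elapsed / duration)
    return (1.0 - new_weight, new_weight)


if __name__ == "__main__":
    for seconds in (0, 2.5, 5, 7.5, 10):
        old, new = crossfade_weights(seconds)
        print(f"{seconds:>4}s  old={old:.2f}  new={new:.2f}")
```

The two weights could be used as opacities for the outgoing and incoming image sets while everything keeps swirling.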

There’s still lots to figure out and lots more to learn. But right now I’m excited about the possibilities… and the fact that the performance will be all INDOORS and immune to the whims of the weather gods AND there will be absolutely NO giant puppets in the show. All this makes me feel positively relaxed about the whole thing. How hard can it be?

Dave: many years ago I saw a rather elaborate piece of "performance art" that was staged inside a cathedral in San Francisco. It used scrims and projections, much like you're fooling around with, as the characters walked around inside someone's head. I think it was called "virtual sho" or some such weighty thing.

What was really cool was that they projected the images in anaglyph 3-D. It was pretty astounding, really. It might be something to play around with. (As opposed to more giant puppets.)

Oh, and thank you for the nice comment on "EoG."

Thanks, I'll see if I can look it up. I must say though I'm not a massive fan of 3D at the best of times.

3D is not my fave, either, but I did like the look it gave to the live performance.

http://articles.latimes.com/1992-01-12/entertainment/ca-466_1_performance-art

Sounds really cool Dave – I've mucked about a bit in QC as well.

An iPad sending TUIO or plain OSC could also give some real-time control to the mix; I've been mucking about with the Lemur app.

Also if you have not seen Max by Cycling '74 you should have a look at that, though it is not free but does a hell of a lot . . .

And maybe multiple angled 'screens', three-dimensional, and then correcting for the different aspects, or multiple projection as well . .

Hope all is well with you all down there

Cheers

Macciza

Hi Macciza,

I know of Max, I've even gone so far as to download a demo but never quite got around to testing it. There's a program called Isadora that perhaps is similar. My understanding is that Isadora is coming more from a theatre background (with a focus on being able to organise stuff into scenes and progress on cue from one to the next) whereas Max is coming at things more from a VJ perspective, where you just want to have access to everything all the time and be able to drop effects on the fly. Please correct me if I'm wrong, and I really should have a good look at it myself. I have played a bit with getting all the tilt, pan and touch data out of an iPod, and there's another, nother, nother project I'm starting on soon which is trying to use that stuff to drive a digital puppet so it can interact with a live performer. Just enjoying playing with things at the moment and learning new things. I've even made it out to the mount a few times just lately. Lookout!

Hi Dave

Yeah Isadora certainly came out of theatre and is designed for it I suppose. It is a similar paradigm to QC.

Max/Jitter is really a complete programming environment from the ground up with many, many pre-packaged possibilities, from no particular perspective. Certainly used widely in theatre and installation art. It is quite possible to build a custom scene-based UI to control stuff. Connects to just about anything: Kinects, Wii, TUIO, OSC, MIDI, DMX, video, internet, LAN, Arduinos, etc.

Same thing with the nother project: Max is often used as the brains in systems like these to co-ordinate things with robotics, sensors, etc.

Have you seen Animata? Opensource puppet animation app.

Sounds like fun.

Only going to the Lookout? Come on, matey . . .

Cheers

MM

I’m loving everything about this post! They all look so beautiful. I’m in love with your style!
